Making Space at the Table

NAP Contemporary’s group show, The Elephant Table, platforms six artists and voices—creating chaos, connection and conversation.

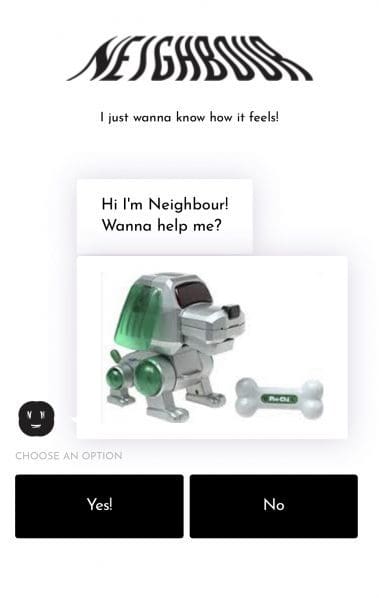

In a 2015 TEDx talk, Jia Jia Fei, the former marketing director of the Guggenheim, made a comment equal parts amusing, stunning and real: "I came, I saw, I selfied." She was talking about how people share their gallery experiences online and how, by extension, this contributes to a digital economy and our algorithm-based social feeds. The algorithm, Fei implies, then goes on to suggest what experiences we should have, based on the experiences we feed it. This looped interaction between online algorithms and human experience is unpacked by Neighbour, a friendly, flirty chat bot centred on uncovering one potent question: how does it feel?

Commissioned as part of the ACCA Open series, in which the Australian Centre for Contemporary Art has supported artists to embark on new digital projects, Neighbour is currently ‘live’ online. After a few directed multiple-choice questions, you can talk with Neighbour at any time while it tries to empathise with human feeling. Marking the first collaboration between artist Amrita Hepi and psychiatrist, neuroscientist and writer Sam Lieblich, the project grew from mutual interests in participatory dance, how we express ourselves online, algorithmic logic, and artificial intelligence.

The central question 'how does it feel?' came about because, some time ago, when Lieblich was in Sicily surrounded by fires, someone literally asked him, "How does it feel?" It was a strange question. "What is the it? And how could I know how it feels?" asks Lieblich. "We roll along using these potted phrases thinking we know what we're on about when we really fundamentally don't." If the question feels simultaneously simple and complicated, it's meant to: Neighbour foregrounds how both chat bots and humans struggle to truly unpack what seems like a basic request.

Inspired by the chat bots found on corporate websites, particularly the Commonwealth Bank's, the pair first sought the help of developers. It soon became clear that a bot interested in existential questions, rather than promoting a product or assigning a personality type, was not the norm. "When people are using a bot it's to get to the point of things," explains Hepi. "When we were speaking to developers they were like, 'So the bot has no purpose? You don't want anyone to buy anything? There's no artist merch?'" Hepi answered, "No, we just want to know how people feel." The developers: "So, it's a psychiatric assessment?" The artists replied, "No, no, it's not that either. We just want to ask it how it feels. And put in some GIFs. And have it flirt well."

The pair were eventually able to teach Neighbour 91,000 adjectives, 24,000 nouns, and "a bunch of adverbs" so that it could answer the question of how something feels. Asked how being cold might feel, Neighbour could send a poem, a tweet, or a video of Hepi and Lieblich performing a choreographed move. The result is a bot that sidesteps a capitalist notion of what a bot is for and closely considers how people talk and interact on the internet. "When we do express ourselves online, what are we trying to get at?" asks Lieblich. "And where does this information go?"

Because artificial intelligence is a new and growing technology, there were, of course, technical issues. But Neighbour's potential failure to truly answer how something feels is part of its design. Consider how Google searches are determined by algorithms: surely these algorithms must sometimes 'fail' us. Google may never show us anything new, or may not give us the best results, but we don't notice precisely because Google doesn't let us. "We're using those tools in a very narrow sense so we don't necessarily see the engines of the algorithm," says Lieblich of platforms like Google and Instagram. "We don't see the algorithm fail; we accept it."

This is why conversation, and watching Neighbour struggle to answer how something feels, is central. "Conversation is something we're already familiar with in all these other contexts, and when you try to have a conversation with the algorithm you start to see the ways in which it's severely limited," explains the neuroscientist. "But, also, you start to watch yourself conforming yourself to its rules." Users soon find themselves communicating with the bot in a way it understands: it trains you how to talk to it. "Things that are presented as algorithmically objective are in fact severely limited by their creators and by the relative simplicity of the algorithm compared to human subjectivity and experience," adds Lieblich.

While the larger point of Neighbour is to show how algorithms, with their mathematical way of thinking, feed us narrow experiences, it's also fun. It has what might be described as a millennial, flirty humour: it knows how to search the internet for videos, GIFs and images that might capture human feeling. Even though the creators are foregrounding the alienation of human experience under algorithms, they don't want Neighbour (which itself has been marketed through highly Instagrammable promotional videos) to be alienating.

“I hope it’s as simple as people coming to the realisation that the language we use like ‘how does it feel’, ‘what is it’ and ‘what is it like’ is actually fundamentally strange and difficult,” says Lieblich.

Neighbour

Amrita Hepi and Sam Lieblich

ACCA Open online

19 August – 17 October